Folder structure

elk folder

- docker-compose.yml
- elasticsearch:
  - Dockerfile
  - alerting.zip
  - config:
    - elasticsearch.yml
  - data:
- kibana:
  - Dockerfile
  - opendistroAlertingKibana-1.13.0.0.zip
  - config:
    - kibana.yml
- logstash:
  - Dockerfile
  - config:
    - logstash.yml
  - pipeline:
    - logstash.conf

Configuration files

Elasticsearch build image

Dockerfile

Download the Open Distro alerting plugin zip (github.com/opendistro-for-elasticsearch/alerting/releases) and save it as alerting.zip next to the Dockerfile.

FROM docker.elastic.co/elasticsearch/elasticsearch:7.10.2

COPY ./alerting.zip /opendistro/alerting.zip

RUN bin/elasticsearch-plugin install -b file:///opendistro/alerting.zip

docker build --tag elasticsearch-opds:7.10.2 .

Kibana build image

github.com/opendistro-for-elasticsearch/alerting-kibana-plugin/releases/tag/v1.13.0.0


Dockerfile

FROM docker.elastic.co/kibana/kibana:7.10.2

COPY ./opendistroAlertingKibana-1.13.0.0.zip /opendistro/opendistroAlertingKibana-1.13.0.0.zip

RUN bin/kibana-plugin install file:///opendistro/opendistroAlertingKibana-1.13.0.0.zip

docker build --tag kibana-opds:7.10.2 .

Elasticsearch config (elasticsearch.yml)

## Default Elasticsearch configuration from Elasticsearch base image.

## https://github.com/elastic/elasticsearch/blob/master/distribution/docker/src/docker/config/elasticsearch.yml

#

cluster.name: "docker-cluster"

network.host: 0.0.0.0

## X-Pack settings

## see https://www.elastic.co/guide/en/elasticsearch/reference/current/setup-xpack.html

#

#xpack.license.self_generated.type: trial

#xpack.security.enabled: true

#xpack.monitoring.collection.enabled: true

Kibana config (kibana.yml)

## Default Kibana configuration from Kibana base image.

## https://github.com/elastic/kibana/blob/master/src/dev/build/tasks/os_packages/docker_generator/templates/kibana_yml.template.js

#

server.name: kibana

server.host: 0.0.0.0

elasticsearch.hosts: [ "http://elasticsearch:9200" ]

monitoring.ui.container.elasticsearch.enabled: true

## X-Pack security credentials

#

#elasticsearch.username: elastic

#elasticsearch.password: changeme

Logstash config (logstash.yml)

## Default Logstash configuration from Logstash base image.

## https://github.com/elastic/logstash/blob/master/docker/data/logstash/config/logstash-full.yml

#

http.host: "0.0.0.0"

xpack.monitoring.elasticsearch.hosts: [ "http://elasticsearch:9200" ]

## X-Pack security credentials

#

xpack.monitoring.enabled: true

#xpack.monitoring.elasticsearch.username: elastic

#xpack.monitoring.elasticsearch.password: changeme

logstash.conf

input {
  tcp {
    port => 5001
    codec => json_lines
  }
}

## Add your filters / logstash plugins configuration here

output {
  elasticsearch {
    hosts => "elasticsearch:9200"
    index => "elk-opendistro"
  }
  stdout { codec => rubydebug }
}

docker-compose.yml

version: '3.8'

services:
  elasticsearch:
    image: elasticsearch-opds:7.10.2
    volumes:
      - type: bind
        source: "${PWD}/elasticsearch/config/elasticsearch.yml"
        target: /usr/share/elasticsearch/config/elasticsearch.yml
        read_only: true
      - "${PWD}/elasticsearch/data:/usr/share/elasticsearch/data"
    ports:
      - "9200:9200"
      - "9300:9300"
    environment:
      discovery.type: single-node
    networks:
      - elk

  logstash:
    image: docker.elastic.co/logstash/logstash:7.11.2
    volumes:
      - type: bind
        source: "${PWD}/logstash/config/logstash.yml"
        target: /usr/share/logstash/config/logstash.yml
        read_only: true
      - type: bind
        source: "${PWD}/logstash/pipeline"
        target: /usr/share/logstash/pipeline
        read_only: true
    ports:
      - "5001:5001/tcp"
      - "5001:5001/udp"
      - "9600:9600"
    networks:
      - elk
    depends_on:
      - elasticsearch

  kibana:
    image: kibana-opds:7.10.2
    volumes:
      - type: bind
        source: "${PWD}/kibana/config/kibana.yml"
        target: /usr/share/kibana/config/kibana.yml
        read_only: true
    ports:
      - "5601:5601"
    networks:
      - elk
    depends_on:
      - elasticsearch

networks:
  elk:
    external: true

Deploying as a Docker Swarm service

Swarm init & network creation

docker swarm init

docker network create -d overlay elk

docker stack deploy -c docker-compose.yml elk

Checking the Kibana dashboard

Open Distro for Elasticsearch now shows up in Kibana.
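Besides checking the Kibana menu, the plugin install can also be confirmed from Elasticsearch itself. The following is a minimal sketch, assuming Java 11+ and that port 9200 is published on localhost as in docker-compose.yml. The class name PluginCheck and the helper hasAlertingPlugin are made up for illustration; the check only looks for the word "alerting" in the _cat/plugins output.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public class PluginCheck {

    // Returns true if any line of the _cat/plugins output mentions "alerting".
    static boolean hasAlertingPlugin(String catPluginsOutput) {
        return catPluginsOutput.lines()
                .anyMatch(line -> line.toLowerCase().contains("alerting"));
    }

    public static void main(String[] args) throws Exception {
        // Assumption: Elasticsearch is reachable on localhost:9200 without authentication.
        HttpRequest request = HttpRequest.newBuilder(
                URI.create("http://localhost:9200/_cat/plugins")).GET().build();

        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());

        System.out.println(response.body());
        System.out.println("alerting plugin found: " + hasAlertingPlugin(response.body()));
    }
}
```

If the last line prints false, the image was probably built without the plugin zip, so the Dockerfile build step is the first thing to re-check.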

Sending logs from the service

Add the Logstash encoder to build.gradle.

Logs produced by the service are sent to Logstash, which stores them in Elasticsearch.

implementation 'net.logstash.logback:logstash-logback-encoder:6.6'

In logback.xml, set the Logstash address (use the port configured in logstash.conf).

Since this is a test, the log level is set to DEBUG.

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <appender name="console" class="ch.qos.logback.core.ConsoleAppender">
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>%-5level %d{HH:mm:ss.SSS} [%thread] %logger{36} - %msg%n</pattern>
        </encoder>
    </appender>

    <appender name="stash" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <destination>localhost:5001</destination>
        <!-- encoder is required -->
        <encoder class="net.logstash.logback.encoder.LogstashEncoder" />
    </appender>

    <root level="DEBUG">
        <appender-ref ref="console"/>
        <appender-ref ref="stash"/>
    </root>
</configuration>

ElkController.java

package com.example.elk.controller;

import lombok.extern.slf4j.Slf4j;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@Slf4j
@RestController
public class ElkController {

    @GetMapping("logTest")
    public String logTest() {
        log.debug("LOG ERROR %%%%%%");
        return "hi";
    }
}

The goal is to send an alert whenever an error shows up in the logs.

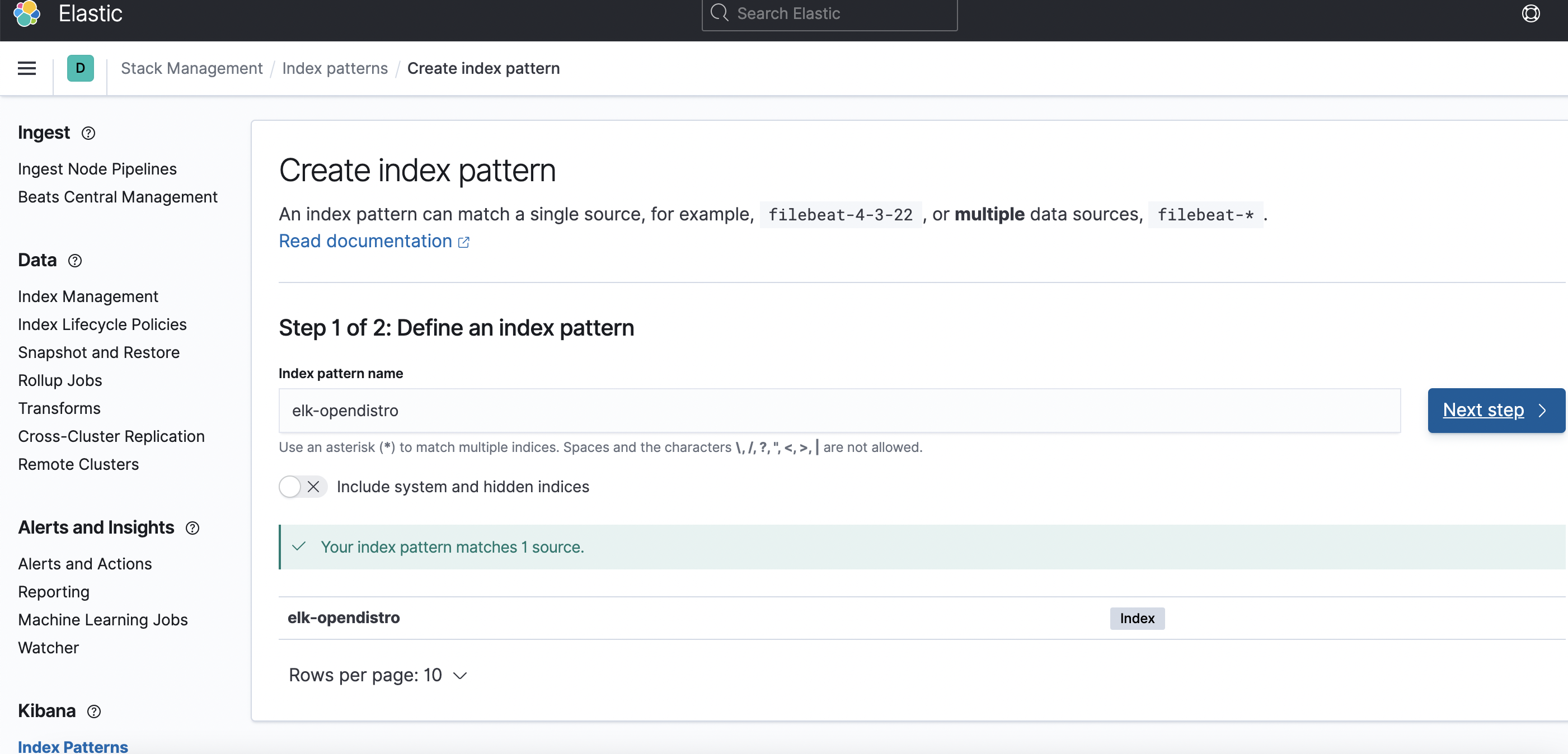

In Kibana, you can create an index pattern for the index named in logstash.conf (elk-opendistro).

Calling the API leaves the error text in the message field, as shown above.
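To check the Logstash pipeline without starting the Spring service, one option is to write a single JSON line straight to the TCP input. The sketch below assumes Logstash is published on localhost:5001 as in docker-compose.yml. The class LogstashSmokeTest and its helpers are hypothetical names, and the escaping covers only backslashes and double quotes, so it is not a general JSON encoder.

```java
import java.io.OutputStream;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

public class LogstashSmokeTest {

    // Builds one newline-terminated JSON document, the framing the json_lines codec reads.
    static String toJsonLine(String level, String message) {
        return "{\"level\":\"" + escape(level) + "\",\"message\":\"" + escape(message) + "\"}\n";
    }

    // Minimal escaping: backslashes and double quotes only (control characters are not handled).
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    public static void main(String[] args) throws Exception {
        // Assumption: the Logstash tcp input from logstash.conf listens on localhost:5001.
        try (Socket socket = new Socket("localhost", 5001);
             OutputStream out = socket.getOutputStream()) {
            out.write(toJsonLine("ERROR", "LOG ERROR smoke test").getBytes(StandardCharsets.UTF_8));
            out.flush();
        }
    }
}
```

If the pipeline is working, the event should appear in the Logstash container output (the rubydebug stdout) and in the elk-opendistro index.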

Sending Open Distro alerts (#slack)

I created a Slack channel.

Settings & administration > Manage apps > search for Webhook

Incoming WebHooks > Add to Slack

Select the channel.

The Webhook URL is then displayed.
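Before wiring the URL into Kibana, it can help to confirm that the webhook itself works. The sketch below posts a JSON body with a text field, which is the payload format Slack incoming webhooks accept. It assumes Java 11+ and reads the URL from an environment variable named SLACK_WEBHOOK_URL; that variable name and the class SlackWebhookTest are choices made for this example only.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public class SlackWebhookTest {

    // Builds the JSON body for an incoming webhook: {"text":"..."}.
    // Escapes backslashes and double quotes only.
    static String payload(String text) {
        String escaped = text.replace("\\", "\\\\").replace("\"", "\\\"");
        return "{\"text\":\"" + escaped + "\"}";
    }

    public static void main(String[] args) throws Exception {
        // Assumption: the webhook URL copied from Slack is exported as SLACK_WEBHOOK_URL.
        String url = System.getenv("SLACK_WEBHOOK_URL");
        if (url == null || url.isEmpty()) {
            System.err.println("Set SLACK_WEBHOOK_URL first");
            return;
        }

        HttpRequest request = HttpRequest.newBuilder(URI.create(url))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(payload("Test message from the ELK setup")))
                .build();

        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

Keeping the URL in an environment variable rather than in source avoids committing it by accident, since anyone holding the URL can post to the channel.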

Back in Kibana, add Slack as a destination under Alerting > Destinations.

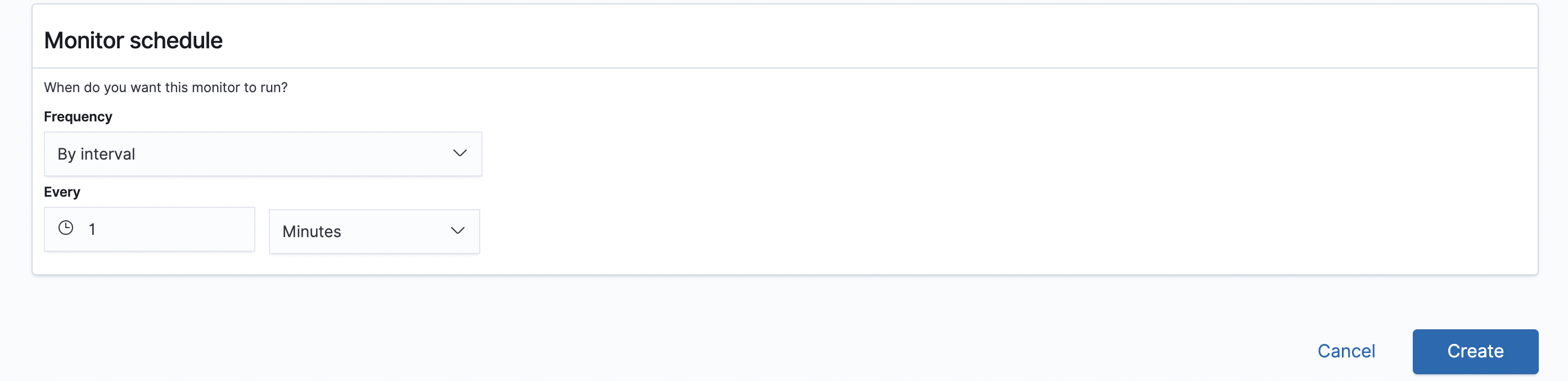

Creating a monitor that sends alerts

Using "Define extraction query", I wrote a query so that an alert fires when an error occurs.

The monitor runs the check once a minute.
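The post does not show the extraction query itself, so the following is only one possible shape for it: a match on the message field combined with a one-minute range on @timestamp, both of which are fields the LogstashEncoder emits. The sketch runs the same query body against the elk-opendistro index from outside Kibana, assuming Java 11+ and Elasticsearch on localhost:9200. The class ErrorQueryCheck and the helper errorQuery are hypothetical, and the arguments are inserted without JSON escaping.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public class ErrorQueryCheck {

    // Builds a query for documents whose message matches the keyword within the
    // given time window (for example "1m"). Arguments are inserted without escaping.
    static String errorQuery(String keyword, String window) {
        return "{\"size\":0,\"query\":{\"bool\":{"
                + "\"must\":[{\"match\":{\"message\":\"" + keyword + "\"}}],"
                + "\"filter\":[{\"range\":{\"@timestamp\":{\"gte\":\"now-" + window + "\"}}}]"
                + "}}}";
    }

    public static void main(String[] args) throws Exception {
        // Assumption: the index name comes from logstash.conf and no authentication is enabled.
        HttpRequest request = HttpRequest.newBuilder(
                        URI.create("http://localhost:9200/elk-opendistro/_search"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(errorQuery("ERROR", "1m")))
                .build();

        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());

        // A trigger condition such as ctx.results[0].hits.total.value > 0
        // would fire when hits.total.value in this response is non-zero.
        System.out.println(response.body());
    }
}
```

Pasting the string returned by errorQuery("ERROR", "1m") into the monitor's query editor should give the same result the monitor evaluates on each run.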

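The trigger defines the condition on the query result under which an alert should be sent.

For "Add destination", select the Slack destination created earlier.

When the trigger fires, the monitor shows it as Triggered, so it can be tracked there.

The alert actually arrives in Slack.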
